PostgreSQL and RStudio: I know we match

A Little Tale

Recently at work, I was asked to take data that already existed for a specific project and figure out how to standardize it to the Observational Medical Outcomes Partnership (OMOP) Common Data Model (CDM). Not only did the data have to conform to this CDM but the values in the database must also follow a specific, validated vocabulary. To give you some background, I work at a medical school where I only work with patient level data and I have never had to normalize the data to any kind of standard. Most of the data I deal with have been primarily collected by the investigator’s team and then used for analysis. Rinse and repeat.

Anyway, after a little (read: a lot) of digging, I think I have it somewhat figured - at least enough so that I can get to work. The OMOP CDM was designed to make disparate clinical data - like the kind I work with - conform in a way that it is easier to perform analysis using standardized tools and share the data and its meaning across applications and business processes. Using a CDM, reduces the pain of having to mush together data from multiple sources and applications. For this particular project, doing that was like forcing pieces of a puzzle together with tape so I am totally on board with a CDM. I think you get the picture, now to the good stuff.

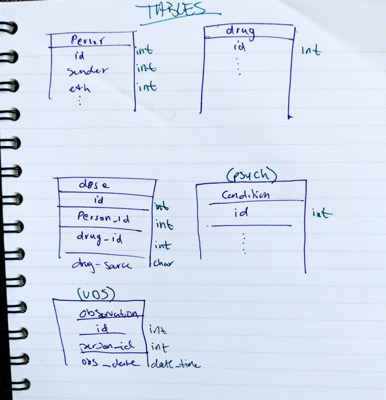

What I started with was dozens of datasets in SAS and R. Data that have already been harmonized and aggregated. Several hours into “backwards-reading” thousands of lines of code to find original values with the schema and validated lexicon side by side I was able to get an idea of what was required and what we had:

Thankfully, OMOP has all the sources I needed to get to work:

- Standardized vocabularies

- OHDSI manual

- Athena OMOP database (ex. Race)

- DDL’s thank foodness for this

- BTW Data Defnition Languages (DDLs), if provided, are great as they establish uniformity within your database. In this case, the OMOP has created standards for the tables and fields, the value types and even the constraints for the foreign keys.

Creating the OMOP CDM Database

Steps (Note: I assume you already have PostgreSQL setup) :

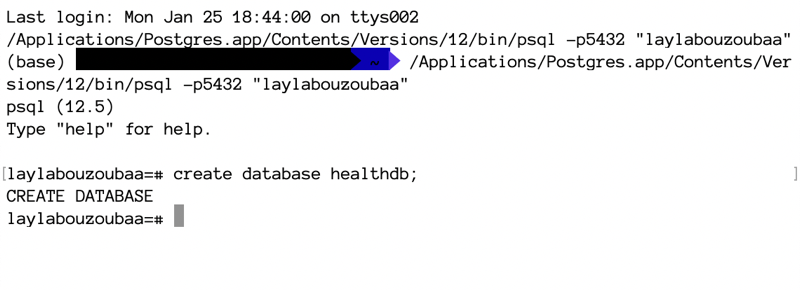

- Create PostgreSQL database

- Open PostgreSQL app

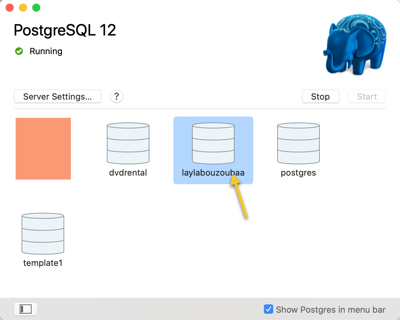

- Double click the database with your name to open the SQL shell

- run

create database healthdb;

- Open PostgreSQL app

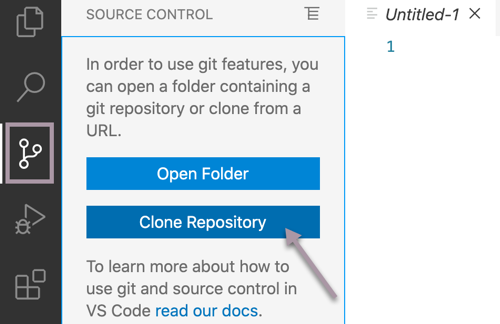

- In a database manager or advanced editor (I <3 Visual Studio Code), clone the repo that contains the DDL for PostgreSQL: https://github.com/OHDSI/CommonDataModel.git. I have a special directory on my machine for repos like this.

The “Source Control” extension comes out of the box when you install VSC for the first time

The “Source Control” extension comes out of the box when you install VSC for the first time - Add the

healthdbdatabase to VSC by navigating to the PostgreSQL Explorer, pressing the+button and following the prompts (Make sure you have the PostgreSQL extension for VSC ) You will need to input the credentials you setup when configuring your PostgreSQL application.

- Once a connection to the

healthdbdatabase has been established, right click the database and select “New Query” and fresh page will appear. - Navigate to the File Explorer where the contents of the the OHDSI/CommonDataMmodel repository are (or where you have another DDL you’d like to use). Copy and paste the contents of

OMOP CDM postgresql ddl.txt, select all, right-click and select “Run Query”

Get ODBC Drivers

Before we can get to working with data in RStudio, we need to make sure that RStudio can interface with our database. RStudio can do this through Open Database Connection (ODBC) drivers. For the purposes of this project, I will need two specific drivers:

- Unix ODBC

- This is neceessary for *nix users as the POStgres ODBC driver on a Mac requires some confg files that does not come with the “out-of-the-box” installation of the PostgreSQL application for Mac

- Steps:

- Download the driver

- Open terminal

cdto downloaded file- Run these commands:

gunzip unixODBC*.tar.gztar xvf unixODBC*.tarcd unixODBC-2.3.9./configuremakemake install

- PostgreSQL ODBC

- Select /src and download the latest tarball

- Unzip

- Run these steps to install the drivers

Finally, the fun part…

Working with a database in RStudio is not so bad - there is a lot of documentation to help you along. For this part we will only need two packages:

library(tidyverse)

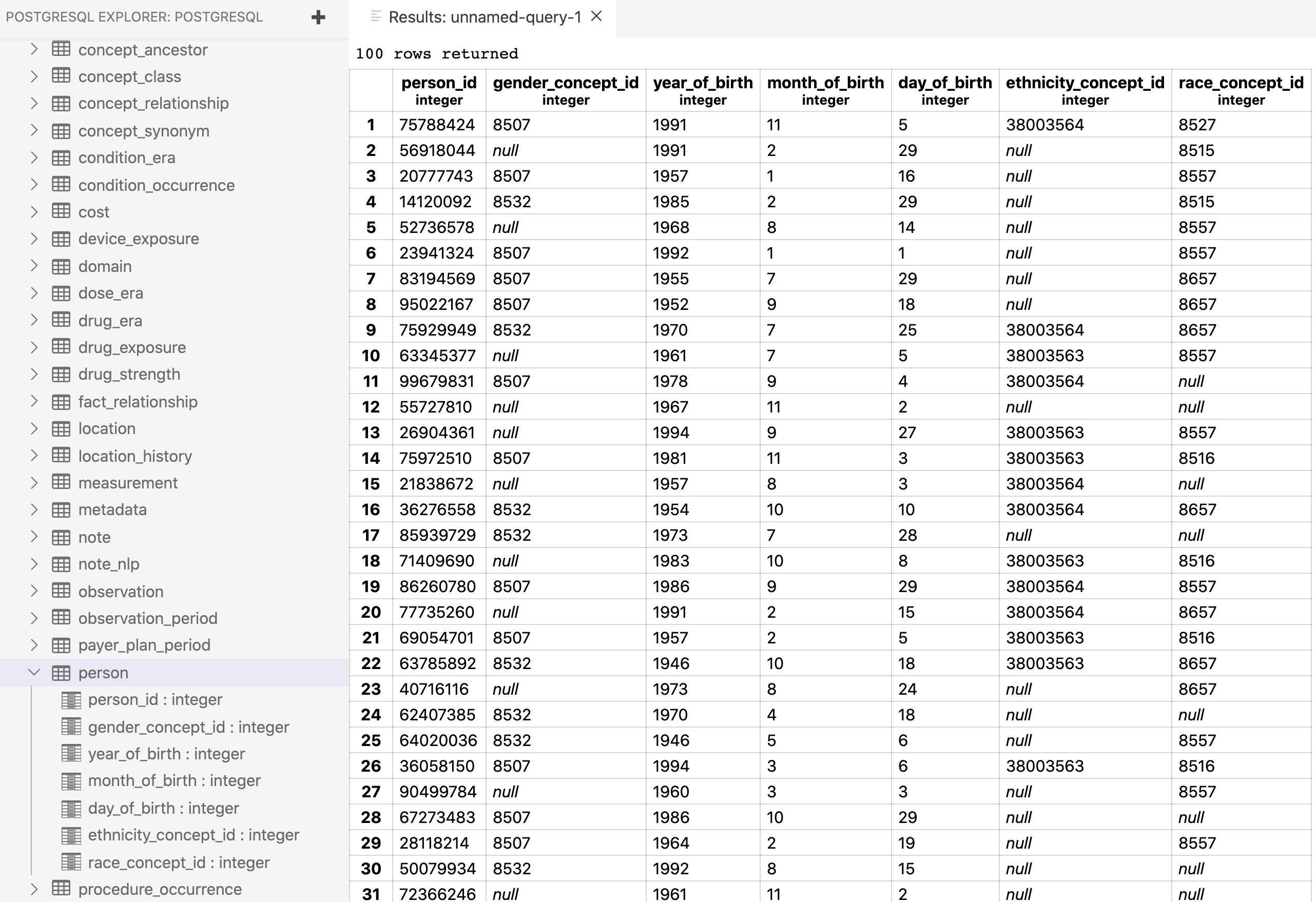

library(DBI)‘person’ table specs

person_id = 8 digit int

gender_concept_id = only two standard concepts: FEMALE (concept_id=8532) and MALE (concept_id=8507)

year_of_birth = 4 digit int

month_of_birth = 1-2 digit int

day_of_birth = 1-2 digit int

ethnicity_concept_id = only two categories for data on ethnicity: “Hispanic or Latino” (concept_id=38003563) and “Not Hispanic or Latino” (concept_id=38003564)

race_concept_id = “White”(concept_id = 8527), “Black or African American”(concept_id = 8516), “AI/AN”(concept_id = 8657), “Asian”(concept_id = 8515), “NH/PI”(concept_id = 8557)

Creating data for the person table

person_id <- runif(100, min = 10000000, max = 99999999) %>% as.integer()

gender_concept_id <- c(8532,8507, NA) %>% as.integer()

year_of_birth <- runif(100, 1945, 1996) %>% as.integer()

month_of_birth <- runif(100, 1, 12) %>% as.integer()

day_of_birth <- runif(100, 1, 30) %>% as.integer()

ethnicity_concept_id <- c(38003563, 38003564, NA) %>% as.integer()

race_concept_id <- c(8527, 8516, 8657, 8515, 8557, NA) %>% as.integer()

#combine it all togather

person <- tibble(person_id = person_id,

gender_concept_id = sample(gender_concept_id, 100, replace = TRUE),

year_of_birth = year_of_birth,

month_of_birth = month_of_birth,

day_of_birth = day_of_birth,

ethnicity_concept_id = sample(ethnicity_concept_id, 100, replace = TRUE),

race_concept_id = sample(race_concept_id, 100, replace = TRUE))

head(person, 25)## # A tibble: 25 x 7

## person_id gender_concept_… year_of_birth month_of_birth day_of_birth

## <int> <int> <int> <int> <int>

## 1 41470777 8532 1947 5 28

## 2 71954847 8507 1977 9 13

## 3 70480354 NA 1986 3 8

## 4 23693378 8507 1965 5 24

## 5 51833113 NA 1981 6 26

## 6 10775969 8532 1965 1 27

## 7 49074903 8532 1948 3 17

## 8 52489188 8532 1980 2 11

## 9 26350094 8532 1951 4 29

## 10 66737271 8507 1976 8 24

## # … with 15 more rows, and 2 more variables: ethnicity_concept_id <int>,

## # race_concept_id <int>Write to healthdb database

# Connect to the default postgres database

con <- dbConnect(RPostgres::Postgres(),

dbname = 'healthdb',

host = 'localhost',

port = 5432,

password = rstudioapi::askForPassword("Database password"),

user = 'laylabouzoubaa')

#test connection

# sql <- "SELECT * FROM person"

# x <- dbGetQuery(con, sql) #successfull

#write `person` to db

dbWriteTable(con, name = "person", value = person, row.names = FALSE,

overwrite = TRUE) #success!!!That’s it! We now have some people in our person table.

Now to fill in the rest…

Now to fill in the rest…